Deep Dive - What is Tier IV?

21 Oct 2021, by Slade Baylis

In our Deep Dive series, we dive into available technologies to keep you informed and up-to-date with what’s out there and how best to utilise it to empower your own business.

It’s hard not to be concerned about the reliability of your own hosting, especially when you hear news about Facebook and Instagram being down due to “networking issues”. Millions of people around the world rely on these platforms, and their owners invest large amounts of money in making sure the infrastructure is stable and reliable. Yet even they can run into issues that take their systems down. If even the big players have trouble keeping their systems stable, what can smaller businesses do to protect themselves from downtime? The answer is to partner with a data centre that goes the extra mile to make its systems as reliable as possible.

It might surprise you, but most data centres in Australia do not go this extra mile. The industry has standards and certifications that measure how reliable and redundant a data centre’s infrastructure is. Most data centres aim to make their systems reliable, but few go all the way. Micron21 is one of the few Australian data centres to decide that the benefits are worth the cost. In an Australian first, Micron21 achieved Tier IV, the highest rating in the Uptime Institute’s tiered certification. In fact, Micron21 is the only Australian owned and operated data centre to have full Tier IV accreditation status.

To fully understand what this means and the benefit it provides to our customers, we’ll need to go into detail about these standards and how they differ from one another.

The Uptime Institute & Tiered Ratings

The Uptime Institute has long set the benchmark for data centre design and reliability. It was founded in 1993 with the aim of maximising the efficiency of data centres, and to that end it created a tiered data centre rating system. This rating system gave organisations an objective, reliable way to compare the performance and reliability of one site’s infrastructure with another’s. It quickly became, and has remained, the international standard for data centre performance and redundancy.

Alternative standards do exist, such as one accredited by the American National Standards Institute (ANSI). It provides information about power, cooling, and networking reliability. However, the really important point is that this information is only provided as optional, informative additions and is not incorporated into the requirements of the standard itself. For this reason, such standards are sometimes seen as a way for data centres to gain some form of accreditation without having to meet the rigorous criteria laid out by the Uptime Institute.

The Uptime Institute’s system is divided into four tiers, each of which defines standards for maintenance, cooling, power, and fault tolerance. The rating system is progressive, meaning that each tier incorporates the standards of the tiers below it. The goal was not to rank data centres against one another. It was to set criteria that tell organisations whether a particular facility will help them meet their business objectives. For example, a Tier I facility could be the perfect place to run a simple single-page website. However, running a mission-critical application that your business relies on out of that same Tier I facility would likely be a nightmare waiting to happen.

Tier I – Basic Capacity

A data centre that is rated as a Tier I facility is one that provides dedicated site infrastructure to support information technology beyond what is provided in a regular office setting.

What that means is that the infrastructure includes:

- A dedicated space for all IT systems

- Uninterruptible power supplies (UPSs) to protect against power spikes, sags, and momentary outages

- Dedicated cooling equipment that won’t get shut down outside of normal office hours

- An engine generator to protect that infrastructure from extended power outages

Whilst definitely a step up from running systems within an office, this tier is generally only suited to systems where downtime would have a negligible cost to a business. If downtime would bring business operations to a halt, we recommend choosing a facility with a higher rating.

Tier II – Redundant Capacity

A Tier II facility meets the criteria above but also provides redundancy for critical power and cooling components. This redundancy gives greater protection against downtime if any one of these components fails. It also means that data centre engineers can carry out some maintenance on certain equipment without taking services offline.

This cooling redundancy can be provided by cooling equipment such as:

- Chillers

- Cooling Units

- Heat Rejection Equipment

- Pumps

This power redundancy can be provided by equipment such as:

- UPS Modules

- Engine Generators

- Energy Storage

- Fuel Tanks

- Fuel Cells

This tier is better suited to hosting more crucial business systems. It should be noted, however, that whilst the extra power and cooling components do provide added protection, certain maintenance tasks could still require maintenance windows and downtime for client systems.

Tier III - Concurrently Maintainable

Tier II facilities focus mainly on increasing reliability. Tier III focuses instead on adding redundancy so that maintenance can be carried out without downtime. The goal is to set up infrastructure so that any component can be shut down or replaced entirely without affecting overall system availability.

A Tier III facility is able to:

- Replace or perform maintenance on equipment without any downtime for business systems

- Deliver power and cooling to critical components through a primary path, with a secondary, redundant path also available

Because of this flexibility with maintenance, a Tier III data centre can guarantee a higher level of uptime than a Tier II facility. Organisations can therefore host production systems without worrying that those systems will need to be shut down periodically for routine maintenance of the infrastructure.

Tier IV – Fault Tolerance

The final and highest rating for a data centre is Tier IV. This tier builds on the tier below it and expands even further on the requirements for fault tolerance. The main difference between a Tier III and a Tier IV facility is that a Tier IV facility is set up with (at a minimum) 2N+1 fully redundant infrastructure.

What does this mean exactly? Without getting too “techy”, the easiest way to understand it is through the expression “2N+1”. Here, “N” represents the bare minimum number of components an IT system needs in order to operate. Put simply, then, “2N” means that the facility has twice as many components, and twice as much capacity, as its systems need to operate. As you can infer, “2N+1” goes one step further and adds another backup component on top of this. This is what allows for certified 100% uptime capability on things like power and cooling infrastructure.
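To make the arithmetic concrete, here is a small Python sketch that counts installed and spare units under each redundancy scheme. It assumes that all units are identical and interchangeable, and the function names are hypothetical and for illustration only.

```python
# Illustrative sketch only: assumes identical, interchangeable units, where N
# is the minimum number of units needed to carry the full load.
SCHEMES = ("N", "N+1", "2N", "2N+1")


def installed_units(n: int, scheme: str) -> int:
    """Total units installed under a given redundancy scheme."""
    totals = {"N": n, "N+1": n + 1, "2N": 2 * n, "2N+1": 2 * n + 1}
    return totals[scheme]


def spare_units(n: int, scheme: str) -> int:
    """Units that could fail while the remaining units still cover the load N."""
    return installed_units(n, scheme) - n


if __name__ == "__main__":
    n = 3  # hypothetical: three cooling units needed to handle the full heat load
    for scheme in SCHEMES:
        print(f"{scheme:>5}: {installed_units(n, scheme)} installed, "
              f"{spare_units(n, scheme)} spare")
```

With N = 3, the schemes install 3, 4, 6 and 7 units, with 0, 1, 3 and 4 spares respectively. This pooled count is a simplification. In a true 2N design the two halves are separate systems on independent paths, so the real guarantee is that an entire system can be lost, which a simple unit count does not capture.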

To understand how a Tier IV data centre works in practice, let’s use the Micron21 Data Centre as an example. We’ll explain how both the power and cooling infrastructure have been designed and implemented to achieve that level of redundancy.

The Micron21 Data Centre – The first Tier IV Data Centre in Australia

At Micron21 we offer services to businesses of all sizes, from small start-ups on our Shared Web Hosting services to large enterprises and Government on our VMware platform. However, when designing our data centre, our aim was to be able to house the most mission-critical services imaginable. With that aim in mind, we set out to achieve the highest rating from the Uptime Institute, and we were the very first in Australia to achieve it.

Not only did we go for the highest available accreditation, but the Uptime Institute themselves have acknowledged that we have far exceeded the top standards they set. Our power infrastructure has between 3N and 4N redundancy, meaning we have between three and four times as much capacity as required. Our cooling infrastructure is similar: most systems have 3N redundancy and others have 4N. Although the standards neither cover nor require it, we also applied the same principles and standards when building our core network.

In implementing and testing all of the above, we had to fly qualified independent electrical and data centre engineers in from Singapore for a week to evaluate our redundancy. They performed a battery of tests (pun intended). First they systematically eliminated each core piece of infrastructure in turn. Then they moved on to scenarios that combined failures, such as losing power and cooling components at the same time. All of this was done with the mains power completely off and with our production environment still running smoothly! And yes, we passed with flying colours!

You could say that Micron21 would be a “Tier V” data centre, if such a tier were ever created!

The question remains though - how does this look in practice? It’s easy to get bogged down in the weeds when looking into technology standards, so instead we’ll walk through how it’s actually been implemented in our own power and cooling infrastructure.

Power

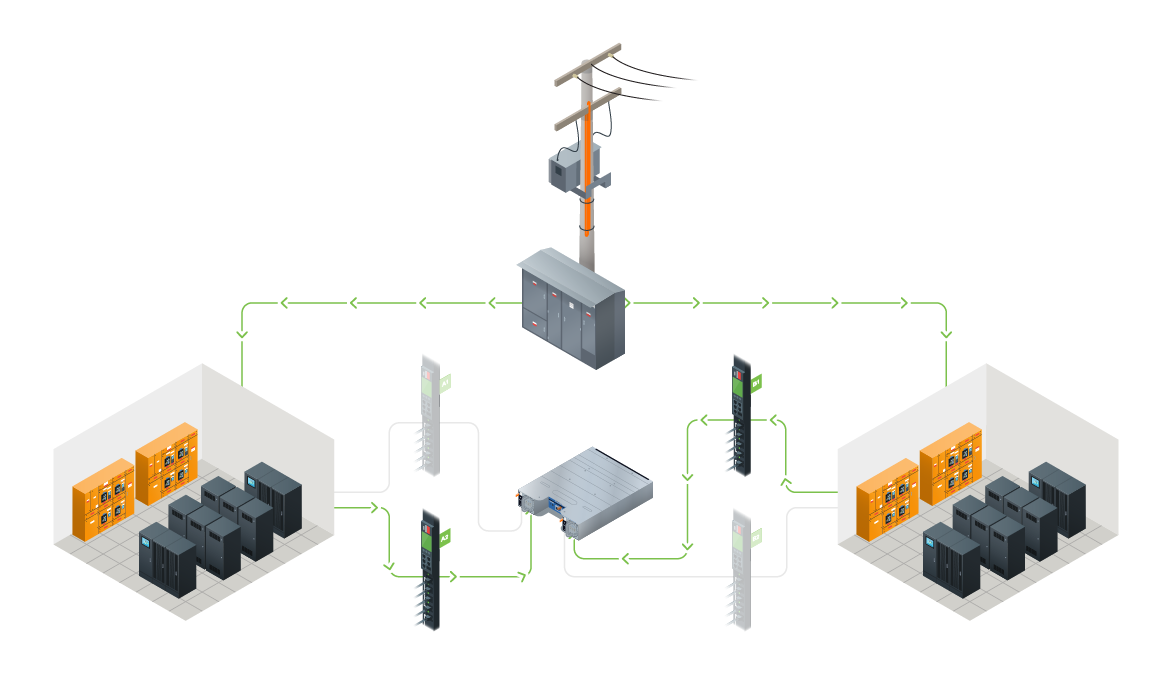

The Micron21 Data Centre is designed to ensure constant, unfaltering power. Our power supply contingencies aim to eliminate the risk of power failure, along with the data corruption or hardware damage that can result from it. Every device within the data centre is provisioned with two power inputs. This procedural standard ensures that a device keeps running if a single power source fails.

Each of a device's two power cables runs from a different one of the two independent Power Distribution Units (PDUs) in its rack. In the unlikely event that one cable to the device fails, the other cable maintains the power supply. In turn, each of a rack's two PDUs draws electricity from a different Uninterruptible Power Supply (UPS).

Power usage is load balanced across the data centre. Each of the four IT load power feeds, which supply the two PDUs attributed to each rack, has its own dedicated uninterruptible power supply. The four UPSs are split between two power rooms, each of which is physically independent and fire rated.

The Micron21 power rooms are fed by independent external mains power connections.

In the event the external mains power source fails, each Micron21 power room is provisioned with dedicated backup generator capacity. Each generator is capable of indefinitely powering the entire Micron21 Data Centre if required.
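The power chain above can be modelled as a small dependency graph and tested the same way the engineers did: fail components one at a time, then in combination. This Python sketch is a simplified, hypothetical model. The component names, and which UPS feeds each PDU in a given rack, are assumptions made for illustration and do not describe the actual electrical design.

```python
# Hypothetical model of the dual-path power chain described above.
# Component names and the rack's UPS-to-PDU mapping are illustrative
# assumptions, not the facility's actual single-line diagram.
from itertools import combinations

# Each component maps to the upstream components it can draw power from.
# Anything that never appears as a key (mains, generators) is a source.
UPSTREAM = {
    "power_room_a": ["mains_a", "generator_a"],
    "power_room_b": ["mains_b", "generator_b"],
    "ups_1": ["power_room_a"],
    "ups_2": ["power_room_a"],
    "ups_3": ["power_room_b"],
    "ups_4": ["power_room_b"],
    "pdu_a": ["ups_1"],  # assumed: this rack's first PDU is fed by UPS 1
    "pdu_b": ["ups_3"],  # assumed: its second PDU is fed by UPS 3
    "psu_1": ["pdu_a"],
    "psu_2": ["pdu_b"],
    "server": ["psu_1", "psu_2"],
}


def is_powered(component: str, failed: set[str]) -> bool:
    """True if the component works and at least one upstream path is live."""
    if component in failed:
        return False
    sources = UPSTREAM.get(component)
    if not sources:  # a source such as mains or a generator
        return True
    return any(is_powered(src, failed) for src in sources)


def components(target: str = "server") -> list[str]:
    """Every component that could fail, excluding the target device itself."""
    names = set(UPSTREAM)
    for sources in UPSTREAM.values():
        names.update(sources)
    names.discard(target)
    return sorted(names)


def survives_failures(k: int, already_failed: frozenset[str] = frozenset(),
                      target: str = "server") -> bool:
    """True if the target stays powered for every combination of k more failures."""
    return all(
        is_powered(target, set(already_failed) | set(combo))
        for combo in combinations(components(target), k)
    )


if __name__ == "__main__":
    print("Any single failure:", survives_failures(1))
    print("Mains off, plus any single failure:",
          survives_failures(1, frozenset({"mains_a", "mains_b"})))
    print("Any two failures:", survives_failures(2))
```

In this model the server survives any single failure. It also survives any single failure with both mains connections off. It does not survive every pair of failures, because losing both PDUs in one rack cuts both paths in this deliberately small model. The useful part is the search itself. It enumerates combinations of failures, much like the combination scenarios in the testing described earlier.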

Cooling

Devices are housed in carefully monitored, climate-controlled environments. We adopt a 'hot aisle, cold aisle' methodology so that devices stay cool while secured in our purpose-built Emerson racks. Each rack is supported by XDP top-of-rack cooling, and XDP units provide enough capacity to cool the load of up to four racks simultaneously.

Each group of four top-of-rack air conditioners is supplied by four independent cooling sources, which ensures a constant flow of refrigerant.

Each of the independent cooling sources is linked to buffer tanks containing 10,000 litres of continuously chilled water. The water temperature is maintained by two independent industrial-strength chillers.

During cooler weather, our innovative climate control system draws in and processes naturally cold air from outside. In this mode, the industrial chillers use only their radiators rather than their electric compressors. This keeps the cooling infrastructure reliable while optimising it for environmental sustainability.

Tertiary condensers are mounted on the roof of the data centre as an additional cooling measure. They supply in-row cooling units placed every four racks. These units maintain cooling in the unlikely event that any or all of the top-of-rack cooling systems go offline.
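The top-of-rack figures above lend themselves to a quick capacity check. This Python sketch reads them as one unit per rack, with each unit able to cool four racks. It also assumes equal rack loads and assumes the in-row units alone can carry a group's load. These readings are ours, and the names are hypothetical.

```python
# Hypothetical sketch of capacity-based redundancy for one four-rack cooling
# group. Assumes one top-of-rack unit per rack, that each unit can cool up to
# four racks (our reading of the figures above), and equal load per rack.
RACKS_PER_GROUP = 4
RACKS_COOLED_PER_UNIT = 4


def redundancy_factor(working_units: int) -> float:
    """Available cooling capacity divided by the group's load, in rack-loads."""
    return working_units * RACKS_COOLED_PER_UNIT / RACKS_PER_GROUP


def group_is_cooled(failed_units: int, in_row_online: bool = False) -> bool:
    """Top-of-rack units cover the load, or the in-row units take over
    (assumed here to be able to carry the whole group on their own)."""
    working_units = RACKS_PER_GROUP - failed_units  # one unit per rack
    return redundancy_factor(working_units) >= 1 or in_row_online


if __name__ == "__main__":
    print(f"All units working: {redundancy_factor(RACKS_PER_GROUP):.0f}N")
    for failed in range(RACKS_PER_GROUP + 1):
        print(failed, "failed ->", group_is_cooled(failed),
              "| with in-row backup ->", group_is_cooled(failed, in_row_online=True))
```

Under these assumptions, a group starts at 4N and stays cooled until all four top-of-rack units are lost. At that point, the in-row units are what keep it running.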

Redundant Capacity – Going the extra mile

As you can see, implementing Tier IV level redundancy is no easy feat, which is why most providers stop short to save time, effort, and money. Micron21, however, aims to be the best and most reliable option available. That is why we went for the highest accreditation possible. We believe our highly reliable infrastructure makes us the best option for businesses looking to host their mission-critical systems.

What does Tier IV mean for you?

Some components are protected by up to four times as much infrastructure as required, and most others by at least three times, so we go above and beyond to make our systems as reliable as possible. As a result, the Micron21 Data Centre not only meets the criteria for a Tier IV data centre but exceeds them. This extra resilience means that we can and do provide 100% uptime guarantees on our infrastructure.

It can be interesting to understand the technical side of how this is all implemented, but what matters far more is how it serves our clients' needs. Because this level of protection is built into our facility, every service, from the smallest web hosting account to the largest virtual machine, comes with the peace of mind that it will always be protected from downtime.

Interested in hosting your website or colocating your server with Micron21?

Are you interested in hosting your website on one of our Shared Web Hosting plans? Or perhaps you would like to get a quote for colocating your servers with Micron21? If so, please reach out to our Sales team on 1300 769 972 (Option #1) and we’ll be able to discuss your unique requirements with you.